I am lost in a virtual world.

I am in a tiny house in a seaside town in Tuscany. I can hear a fire crackling next to me, and my brain tells me to move away. There is an organ playing in the distance, so I turn around and step outside onto the pebbled street. The music gets louder. I follow the sound of the organ until I locate its source inside a massive cathedral.

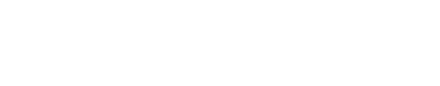

It may feel like I am wandering the streets of Tuscany, but I am actually sitting in a room in Sitterson Hall, home of UNC’s Department of Computer Science, with a giant Oculus Rift virtual reality headset on my face and a pair of headphones over my ears. Computer scientist Dinesh Manocha and his graduate student Carl Schissler are letting me listen to their latest research.

“When you move your head,” Manocha says, “it should track your movement and the sound should change, just like in the real world.”

It does.

This is a far cry from current sound effects. Manocha, fellow computer scientist Ming Lin, and many PhD students in UNC’s GAMMA group hope to mimic how real sounds are created and how sound travels through space. Their research is what allowed me to recognize that the organ music was coming from behind me, and to find its location using only my hearing.

Sound is dynamic. The way you hear it depends on factors like what it’s coming from, how it’s created, and whether there are obstacles in its way.

Current sound production technology for movies, video games, and virtual simulations doesn’t take these factors into account. Lin explains to me that while visual effects have improved drastically over the last 40 years, sound creation has barely changed. For example, sound artists often mimic the sound of helicopter blades by recording the blades of moving fans.

Lin, Manocha, and their team are hoping to change this with their research.

Disney, Intel, Samsung, Arup, and the U.S. Army are just a few of the organizations that have inquired about UNC’s sound technologies and research. The work has even led former students to create a company called Impulsonic.

Both Lin and Manocha tell me that they are most proud of the work their students have accomplished.

Lin tells me how much of a risk it was for her students to forgo well-paying jobs to start Impulsonic, which she and Manocha helped cofound.

Lin and Manocha have also worked on visual and haptic (touch) simulations. Both agree that creating realistic audio has been one of the hardest projects they have ever taken on.

“Audio,” Lin says, “is, computationally, a challenging interface to work with.”

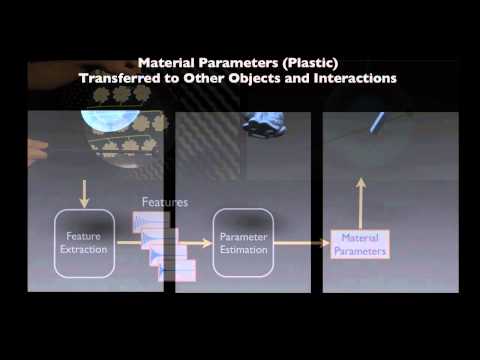

She is leading research on the first piece of sound creation: recreating the audio qualities of materials. Her team does this through what Lin calls “audio material cloning.”

For example, the sounds of a plastic bottle are unique. Tap a plastic bottle at the top, and you’ll notice it sounds different from when you tap it at the bottom.

Lin’s group captures these differences by analyzing audio recordings and building the material properties they find into simulations. That way, if a user touches a simulated plastic bottle, a ceramic bowl, or a metal sheet, the simulation can accurately reproduce the sound that matches where the object is touched.

Using this technology, Lin and her PhD students have been able to create virtual instruments that achieve the sound subtleties you can hear in real life based on where and how an instrument is played.
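Why does the spot you tap matter? One widely used technique in physically based sound synthesis, called modal synthesis, treats an object’s ring as a sum of decaying tones, one per vibration “mode.” Striking a different spot excites the same modes in different proportions. The Python sketch below illustrates that idea only. The mode frequencies, damping rates, and per-location gains (`MODES`, `TAP_GAINS`) are invented numbers, and this is not the GAMMA group’s audio material cloning method, which estimates such parameters from real recordings.

```python
import math

# Hypothetical vibration modes for a small object: (frequency in Hz, damping rate per second).
# In a real system these would be estimated from recordings; these numbers are invented.
MODES = [(440.0, 8.0), (1230.0, 15.0), (2710.0, 30.0)]

# Hypothetical gains: how strongly a tap at each spot excites each mode above.
TAP_GAINS = {
    "top": [1.0, 0.2, 0.6],
    "bottom": [0.3, 1.0, 0.1],
}


def tap_sound(location, duration=0.5, sample_rate=44100):
    """Return audio samples for a tap at `location`: one exponentially decaying
    sine wave per mode, weighted by that location's gains, summed together."""
    gains = TAP_GAINS[location]
    samples = []
    for i in range(int(duration * sample_rate)):
        t = i / sample_rate
        samples.append(sum(
            g * math.exp(-d * t) * math.sin(2 * math.pi * f * t)
            for (f, d), g in zip(MODES, gains)
        ))
    return samples


top = tap_sound("top")
bottom = tap_sound("bottom")
```

Both taps contain the same three pitches, because the object is the same, but the tap at the top is dominated by the 440 Hz mode while the tap at the bottom is dominated by the 1230 Hz mode, so they sound different.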

Manocha’s work tackles the second part of making sound: accurately recreating the way sound moves through space (how it propagates) and the way our brains perceive it.

He looks at factors like room shape, ceiling height, and the material of the walls to find out how they impact sound.
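A classic back-of-the-envelope way to see how room size and wall materials shape what we hear is Wallace Sabine’s reverberation formula, which estimates how long a sound lingers (the time for it to fade by 60 decibels) from the room’s volume and the total absorption of its surfaces. The sketch below is only that textbook estimate, not Manocha’s simulation method: Sabine’s formula ignores room shape entirely, which is exactly the kind of detail his group’s simulations capture. The room dimensions and absorption coefficients are illustrative assumptions.

```python
def sabine_rt60(volume_m3, surfaces):
    """Sabine's estimate of reverberation time: seconds for sound to decay by 60 dB.

    volume_m3: room volume in cubic meters.
    surfaces: list of (area in m^2, absorption coefficient between 0 and 1).
    """
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    return 0.161 * volume_m3 / total_absorption


# A hypothetical 10 m x 8 m room with a 3 m ceiling; coefficients are rough illustrative values.
volume = 10 * 8 * 3                          # 240 m^3
floor = (10 * 8, 0.02)                       # hard floor
walls = (2 * (10 * 3) + 2 * (8 * 3), 0.05)   # painted walls, 108 m^2
soft_ceiling = (10 * 8, 0.60)                # absorbent acoustic tiles
hard_ceiling = (10 * 8, 0.02)                # bare concrete

print(round(sabine_rt60(volume, [floor, walls, soft_ceiling]), 2))  # 0.7
print(round(sabine_rt60(volume, [floor, walls, hard_ceiling]), 2))  # 4.49
```

Swapping only the ceiling material changes the estimated echo time from about 0.7 seconds to about 4.5 seconds, which shows why the choice of surfaces matters so much.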

His group is also studying 3-D spatial sound. For example, your brain can tell whether a sound is coming from your left or your right, an ability known as localization. It can also tell how fast, and in what direction, the source of a sound is moving.

It was this technology I was testing in the Tuscan town. And it worked. Never before, when playing a video game or watching a movie, have I been able to pinpoint where a sound is coming from or gauge its distance from me.
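Two of the cues behind localization and moving sounds can be put into numbers with standard physics approximations. A sound from your right reaches your right ear a fraction of a millisecond before your left ear (the interaural time difference, one of several localization cues), and a sound source moving toward you is heard at a slightly higher pitch (the Doppler effect). The sketch below uses Woodworth’s spherical-head approximation and the textbook Doppler formula for a stationary listener. The head radius is an assumed average, and neither formula is claimed to be the GAMMA group’s model.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s in air at about 20 degrees C
HEAD_RADIUS = 0.0875     # m; an assumed average head radius


def interaural_time_difference(azimuth_deg):
    """Woodworth's spherical-head approximation, for azimuths from -90 to 90 degrees.

    Positive azimuth means the source is to the right; a positive result means
    the sound reaches the right ear first (in seconds).
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))


def doppler_frequency(source_hz, approach_speed):
    """Pitch heard by a stationary listener from a source moving straight toward
    them (positive speed, m/s) or straight away from them (negative speed)."""
    return source_hz * SPEED_OF_SOUND / (SPEED_OF_SOUND - approach_speed)


# A source directly to the right arrives about 0.66 ms earlier at the right ear.
print(interaural_time_difference(90))
# A 440 Hz source approaching at 20 m/s is heard at roughly 467 Hz.
print(doppler_frequency(440, 20))
```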

The research of both professors has applications beyond video games and movies.

When designing concert and lecture halls, hospitals, or even offices and homes, it would be useful for architects to know how sound would carry through the space they’ve created. Would it be too loud? Too quiet? Lin and Manocha are collaborating with Arup, an engineering design firm, and Duda Paine, an N.C. architectural firm, to evaluate the acoustic characteristics of buildings before they are built. That way, a $3 million lecture hall does not turn into a $3 million waste.

In collaboration with computer science professor Gary Bishop, they are also working on a program that would help train visually impaired children to cross the street by accurately mimicking the sound of passing traffic.

Impulsonic is working on commercial products for gaming and virtual reality systems, as well as for architecture.

Virtual reality sound systems like the one I experienced can also train first responders or soldiers with the same audio sensory exposure they would have to deal with in real emergencies.

Manocha says that one major goal of the research is to compute the personalized head-related transfer function, or HRTF, that characterizes how each of us receives sound with our left and right ears.

“Imagine your prescription is different than my prescription,” he says. “The sound waves come, they hit your shoulders, your body, your face, and come differently to each of your ears. Your body is different than mine, so every person has a unique, personalized HRTF.”

Their technology would accurately recreate how sound would be received based on each person’s unique traits.
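In practice, an HRTF is often applied through its time-domain counterpart, a pair of head-related impulse responses (HRIRs), one for each ear. Filtering (convolving) a mono sound with each HRIR produces the two signals sent to the headphones, and a personalized HRTF would mean swapping in HRIRs that match one listener’s body. The sketch below shows only that rendering step. The toy HRIRs (`HRIR_LEFT`, `HRIR_RIGHT`) are made-up numbers, whereas real ones are measured or computed and run to hundreds of samples; computing a personalized HRTF, the hard part Manocha describes, is not shown.

```python
def convolve(signal, impulse_response):
    """Direct-form discrete convolution; output length is len(signal) + len(ir) - 1."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += x * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono source through a listener's left- and right-ear impulse responses."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy HRIRs for a source on the listener's right (hypothetical, not measured data):
# the right ear hears it at once and loudly; the left ear hears it 3 samples later
# and more quietly, because the head is in the way.
HRIR_RIGHT = [1.0, 0.3]
HRIR_LEFT = [0.0, 0.0, 0.0, 0.5, 0.15]

click = [1.0, 0.0, 0.0, 0.0]
left, right = render_binaural(click, HRIR_LEFT, HRIR_RIGHT)
```

Rendering a single click this way gives a right-ear signal that starts immediately and a left-ear signal that arrives later and softer, the same kind of difference the brain uses to decide a sound came from the right.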

While Lin and Manocha are excited about their recent breakthroughs, they know they still have a lot of work ahead.

“It is a very challenging project,” Lin says. “But, intellectually, it is very stimulating to work on cutting-edge research no one else has been able to do.”